While the pattern descriptions and diagrams are sourced from Anthropic’s original publication, we’ll focus on how to implement these patterns using Spring AI’s features for model portability and structured output. We recommend reading the original paper first.

The agentic-patterns project implements the patterns discussed below.

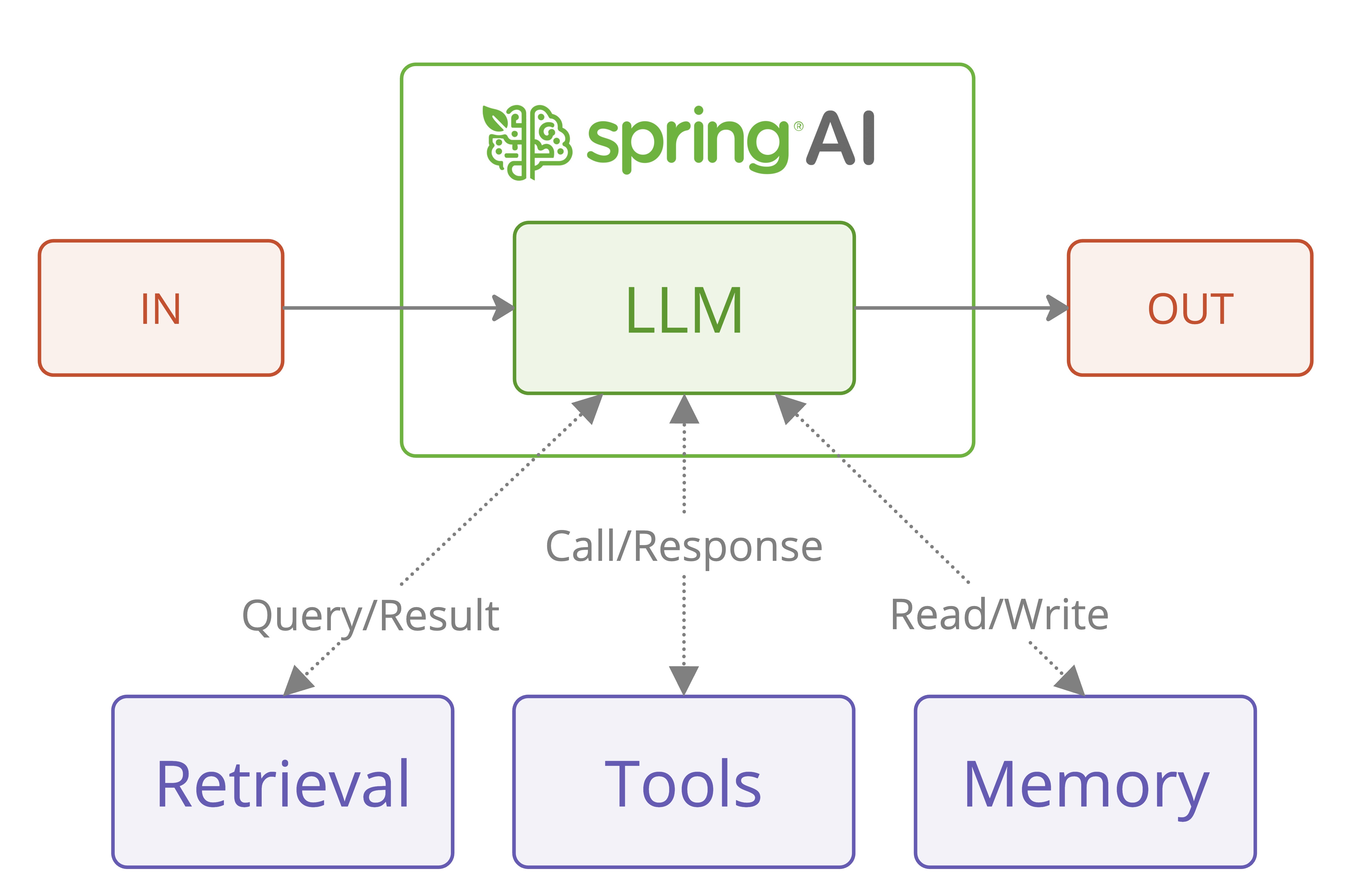

Agentic Systems

The research publication makes an important architectural distinction between two types of agentic systems:

- Workflows: Systems where LLMs and tools are orchestrated through predefined code paths (i.e., prescriptive systems)

- Agents: Systems where LLMs dynamically direct their own processes and tool usage

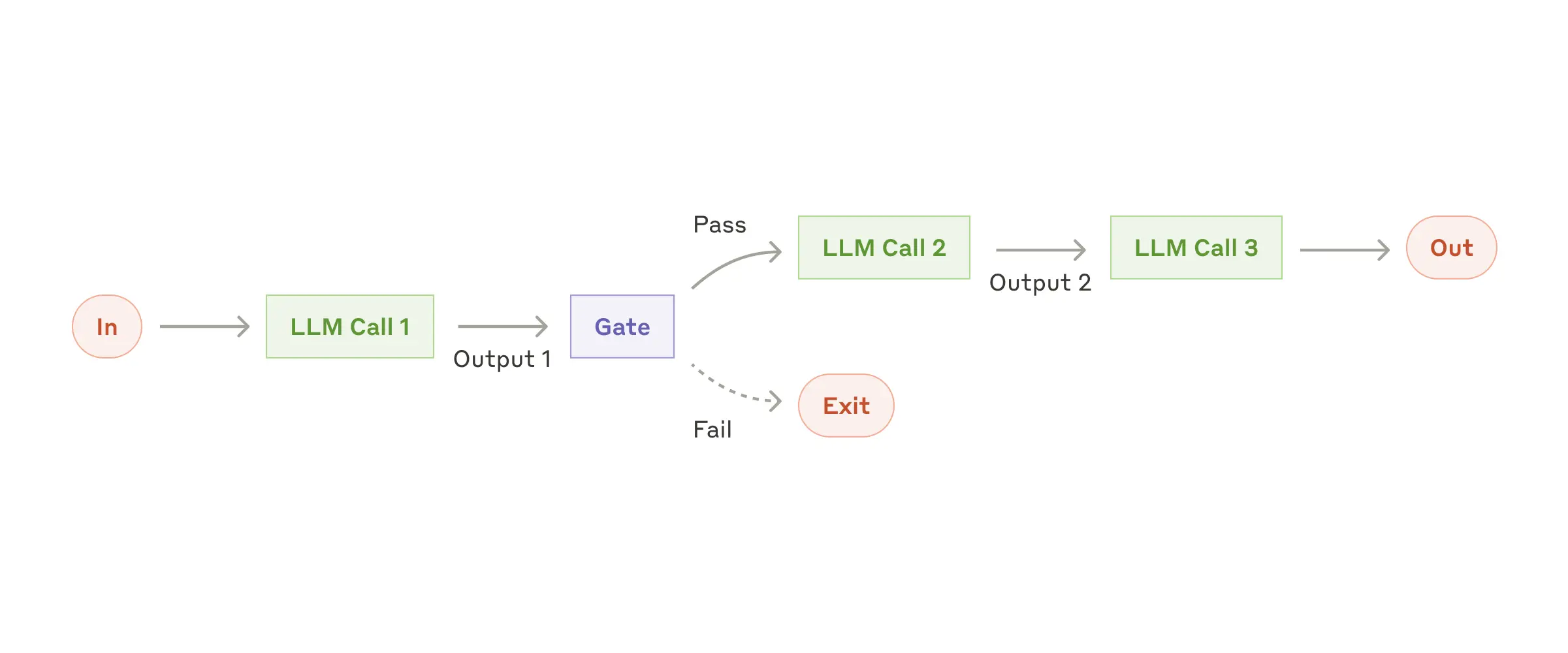

1. Chain Workflow

The Chain Workflow pattern exemplifies the principle of breaking down complex tasks into simpler, more manageable steps.

When to Use:

- Tasks with clear sequential steps

- When you want to trade latency for higher accuracy

- When each step builds on the previous step’s output

Key properties of the pattern:

- Each step has a focused responsibility

- Output from one step becomes input for the next

- The chain is easily extensible and maintainable
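A minimal, framework-free sketch of the chain in plain Java follows. It is not the agentic-patterns project's code or a Spring AI API: the model call is a hypothetical `Function<String, String> llm` that a Spring AI application would back with a real chat-model call, and the class name and prompt layout are illustrative assumptions.

```java
import java.util.List;
import java.util.function.Function;

/**
 * Sketch of the Chain Workflow pattern.
 * Assumption: `llm` is a hypothetical stand-in for a real model call.
 */
final class ChainWorkflow {

    private final Function<String, String> llm;   // hypothetical model call
    private final List<String> stepPrompts;       // one focused instruction per step

    ChainWorkflow(Function<String, String> llm, List<String> stepPrompts) {
        this.llm = llm;
        this.stepPrompts = List.copyOf(stepPrompts);
    }

    /** Runs the steps in order; each step's output is the next step's input. */
    String chain(String userInput) {
        String response = userInput;
        for (String stepPrompt : stepPrompts) {
            // Pair this step's instruction with the previous step's output.
            String input = stepPrompt + "\n" + response;
            response = llm.apply(input);
        }
        return response;
    }
}
```

Extending the chain means appending another step prompt to the list; no other code changes.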

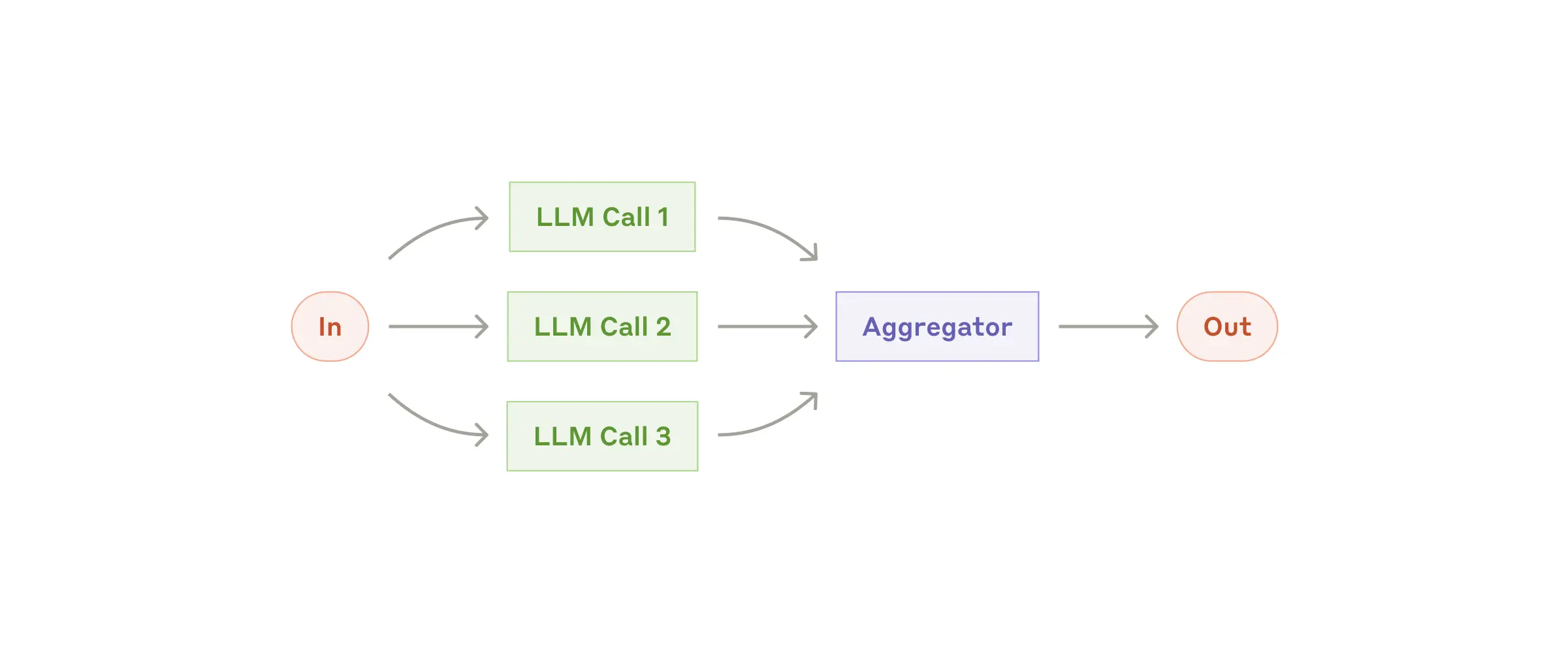

2. Parallelization Workflow

LLMs can work simultaneously on tasks and have their outputs aggregated programmatically. The parallelization workflow manifests in two key variations:

- Sectioning: Breaking tasks into independent subtasks for parallel processing

- Voting: Running multiple instances of the same task for consensus
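The voting variation can be sketched in plain Java: run one prompt several times in parallel and keep the most frequent answer. The `llm` function is a hypothetical, thread-safe stand-in for a model call, and answers are compared as exact strings after trimming whitespace. That assumes short, normalized replies such as a yes/no verdict.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

/**
 * Sketch of the "voting" variant of the Parallelization Workflow.
 * Assumption: `llm` is a hypothetical, thread-safe stand-in for a real model call.
 */
final class VotingWorkflow {

    private VotingWorkflow() {}

    /** Runs the same prompt `runs` times in parallel and returns the most frequent answer. */
    static String majorityVote(String prompt, int runs, Function<String, String> llm) {
        if (runs < 1) {
            throw new IllegalArgumentException("runs must be at least 1");
        }
        // Tally each distinct (trimmed) answer; TreeMap iterates keys in sorted order.
        TreeMap<String, Long> tally = IntStream.range(0, runs)
                .parallel()
                .mapToObj(i -> llm.apply(prompt).strip())
                .collect(Collectors.groupingBy(Function.identity(), TreeMap::new, Collectors.counting()));

        // Only a strictly higher count replaces the leader, so ties go to the first key in sorted order.
        String best = null;
        long bestCount = 0;
        for (Map.Entry<String, Long> entry : tally.entrySet()) {
            if (entry.getValue() > bestCount) {
                best = entry.getKey();
                bestCount = entry.getValue();
            }
        }
        return best;
    }
}
```

Breaking ties by sorted order keeps the result deterministic even though the individual runs finish in an unpredictable order.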

When to Use:

- Processing large volumes of similar but independent items

- Tasks requiring multiple independent perspectives

- When processing time is critical and tasks are parallelizable
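For sectioning, independent inputs can be fanned out over a fixed-size thread pool and collected in their original order. This sketch uses standard `java.util.concurrent` classes, not a Spring AI API; `llm`, the prompt layout, and the `nWorkers` parameter are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

/**
 * Sketch of the "sectioning" variant of the Parallelization Workflow.
 * Assumption: `llm` is a hypothetical, thread-safe stand-in for a real model call.
 */
final class ParallelizationWorkflow {

    private ParallelizationWorkflow() {}

    /** Applies the same prompt to every input concurrently; results keep the input order. */
    static List<String> parallel(String prompt, List<String> inputs, int nWorkers,
                                 Function<String, String> llm) {
        ExecutorService executor = Executors.newFixedThreadPool(nWorkers);
        try {
            List<Future<String>> futures = new ArrayList<>();
            for (String input : inputs) {
                // Each input is an independent subtask, so any worker can take it.
                futures.add(executor.submit(() -> llm.apply(prompt + "\nInput: " + input)));
            }
            List<String> results = new ArrayList<>();
            for (Future<String> future : futures) {
                results.add(future.get()); // waits for each subtask in submission order
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for model calls", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("A model call failed", e.getCause());
        } finally {
            executor.shutdown();
        }
    }
}
```

`nWorkers` bounds how many model calls are in flight at once, which is one way to stay within a provider's rate limits.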

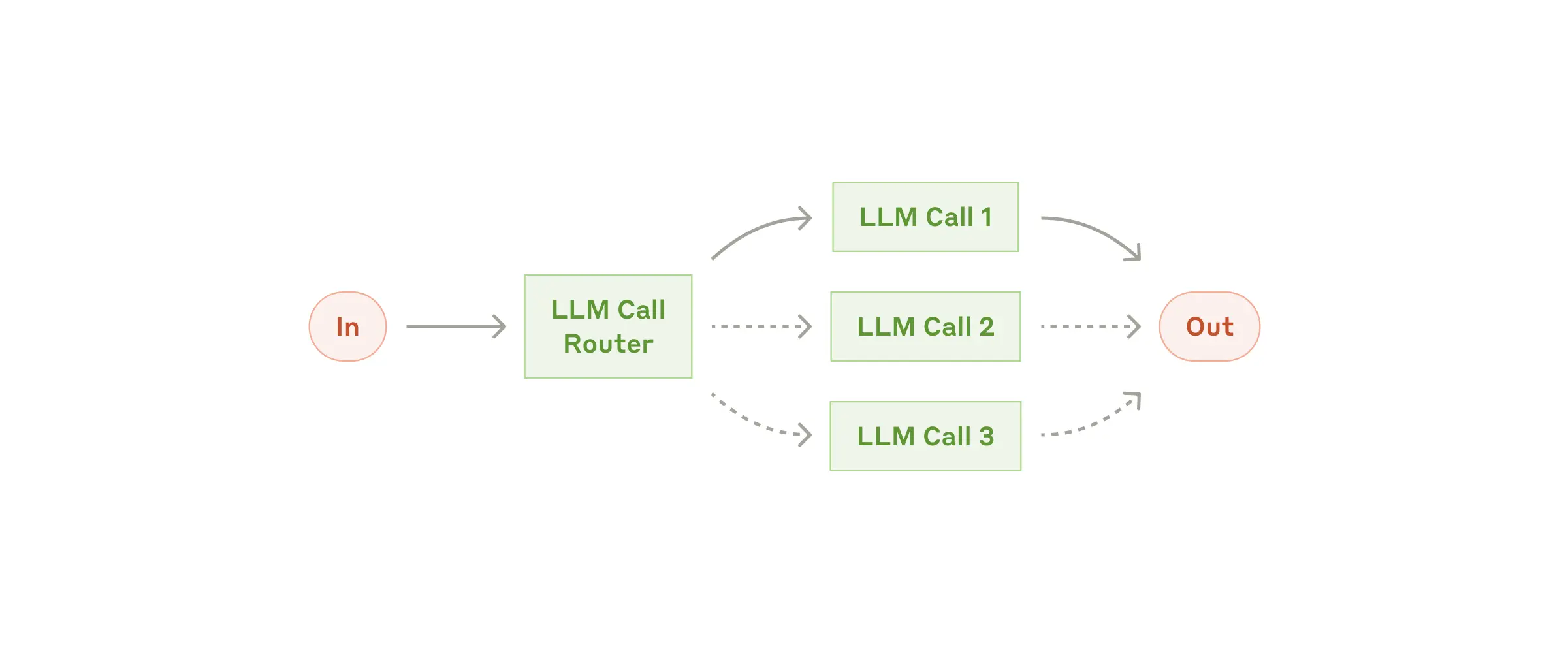

3. Routing Workflow

The Routing pattern implements intelligent task distribution, enabling specialized handling for different types of input. This pattern is designed for complex tasks where different types of inputs are better handled by specialized processes. It uses an LLM to analyze input content and route it to the most appropriate specialized prompt or handler.

When to Use:

- Complex tasks with distinct categories of input

- When different inputs require specialized processing

- When classification can be handled accurately
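A routing step can be sketched as two model calls: one to classify, one to answer with the selected specialized prompt. In this sketch `llm` is a hypothetical model call, and the classifier is simply asked to reply with a bare route name. Given the article's emphasis on structured output, a Spring AI version would more likely map the classification onto a typed object.

```java
import java.util.Map;
import java.util.function.Function;

/**
 * Sketch of the Routing Workflow.
 * Assumptions: `llm` is a hypothetical model call, and the classifier replies
 * with a bare route name (a real system might use structured output instead).
 */
final class RoutingWorkflow {

    private final Function<String, String> llm; // hypothetical model call

    RoutingWorkflow(Function<String, String> llm) {
        this.llm = llm;
    }

    /** Classifies the input, then answers it with the prompt registered for that route. */
    String route(String input, Map<String, String> routes) {
        // Step 1: ask the model to pick one of the known routes.
        String selectorPrompt = "Classify the input into exactly one of these categories: "
                + String.join(", ", routes.keySet())
                + ". Reply with the category name only.\nInput: " + input;
        String selected = llm.apply(selectorPrompt).strip();

        // Step 2: reject anything that is not a known route instead of guessing.
        String specializedPrompt = routes.get(selected);
        if (specializedPrompt == null) {
            throw new IllegalArgumentException("Model selected an unknown route: " + selected);
        }

        // Step 3: handle the input with the specialized prompt.
        return llm.apply(specializedPrompt + "\nInput: " + input);
    }
}
```

Rejecting an unknown route in step 2 keeps a misbehaving classifier from silently sending input to the wrong handler.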

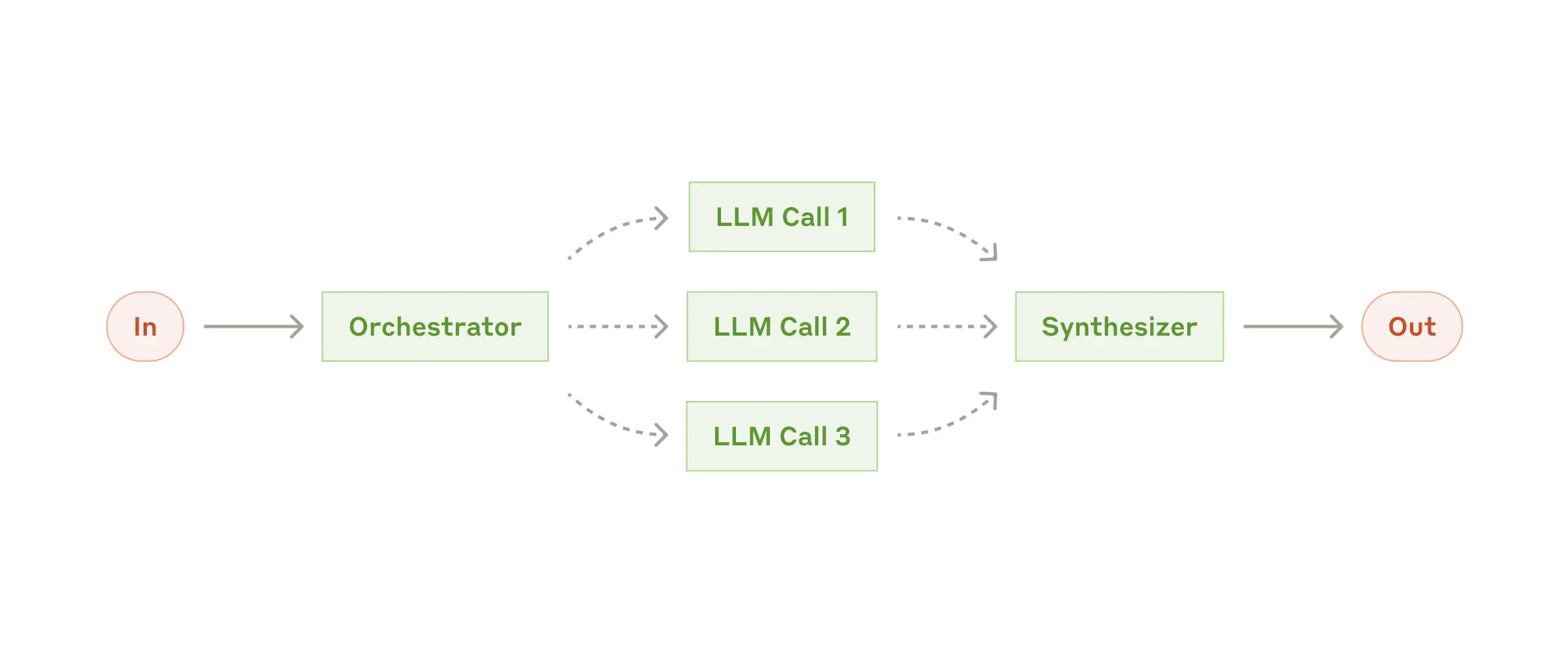

4. Orchestrator-Workers

This pattern demonstrates how to implement more complex agent-like behavior while maintaining control:

- A central LLM orchestrates task decomposition

- Specialized workers handle specific subtasks

- Clear boundaries maintain system reliability

When to Use:

- Complex tasks where subtasks can’t be predicted upfront

- Tasks requiring different approaches or perspectives

- Situations needing adaptive problem-solving
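The decomposition-then-delegation flow can be sketched in plain Java. The `llm` function is hypothetical, and so is the plan format: the orchestrator is asked for one `type: description` subtask per line, which this sketch parses by hand. It illustrates the control flow only and is not the agentic-patterns implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

/**
 * Sketch of the Orchestrator-Workers pattern.
 * Assumptions: `llm` is a hypothetical model call, and the orchestrator replies
 * with one subtask per line in a hypothetical "type: description" format.
 */
final class OrchestratorWorkers {

    /** A subtask proposed by the orchestrator. */
    record Task(String type, String description) {}

    private final Function<String, String> llm; // hypothetical model call

    OrchestratorWorkers(Function<String, String> llm) {
        this.llm = llm;
    }

    /** Lets the orchestrator decompose the task at run time, then runs one worker per subtask. */
    List<String> process(String task) {
        // 1. Orchestrator: the subtasks are decided by the model, not hard-coded.
        String plan = llm.apply("Break down this task into subtasks, one per line, "
                + "formatted as 'type: description'.\nTask: " + task);
        List<Task> subtasks = new ArrayList<>();
        for (String line : plan.split("\\R")) {
            int colon = line.indexOf(':');
            if (colon > 0) { // skip lines that do not match the expected format
                subtasks.add(new Task(line.substring(0, colon).strip(),
                        line.substring(colon + 1).strip()));
            }
        }
        // 2. Workers: each gets the original task plus its own focused instructions.
        List<String> results = new ArrayList<>();
        for (Task subtask : subtasks) {
            results.add(llm.apply("Original task: " + task
                    + "\nStyle: " + subtask.type()
                    + "\nGuidelines: " + subtask.description()));
        }
        return results;
    }
}
```

Parsing free-form lines is the fragile part of this sketch. Turning the plan into typed `Task` objects through structured output would be more robust. The workers run one after another here for readability, but each depends only on its own subtask, so they could also run concurrently.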

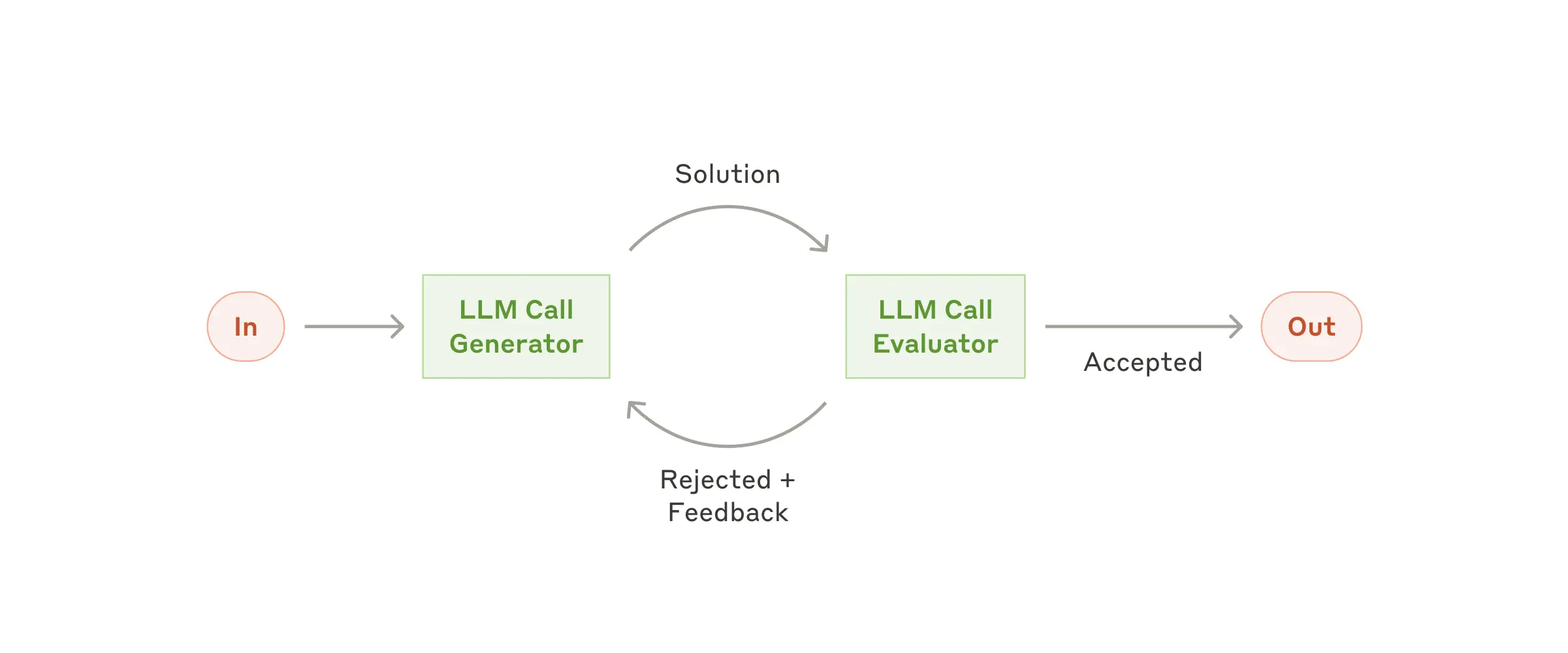

5. Evaluator-Optimizer

The Evaluator-Optimizer pattern implements a dual-LLM process where one model generates responses while another provides evaluation and feedback in an iterative loop, similar to a human writer's refinement process. The pattern consists of two main components:

- Generator LLM: Produces initial responses and refines them based on feedback

- Evaluator LLM: Analyzes responses and provides detailed feedback for improvement

When to Use:

- Clear evaluation criteria exist

- Iterative refinement provides measurable value

- Tasks benefit from multiple rounds of critique
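The generate, evaluate, refine loop can be sketched with two hypothetical model calls, `generator` and `evaluator`, and an invented reply protocol in which the evaluator answers `PASS` or `NEEDS_IMPROVEMENT: <feedback>`. It illustrates the loop's control flow and is not the agentic-patterns implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

/**
 * Sketch of the Evaluator-Optimizer loop.
 * Assumptions: `generator` and `evaluator` are hypothetical model calls, and the
 * evaluator replies "PASS" or "NEEDS_IMPROVEMENT: <feedback>" (a made-up protocol).
 */
final class EvaluatorOptimizer {

    /** Final solution plus every attempt, for inspecting the refinement history. */
    record Result(String solution, List<String> attempts) {}

    private final Function<String, String> generator; // hypothetical generator LLM
    private final Function<String, String> evaluator; // hypothetical evaluator LLM
    private final int maxIterations;                   // hard cap so the loop always ends

    EvaluatorOptimizer(Function<String, String> generator,
                       Function<String, String> evaluator, int maxIterations) {
        if (maxIterations < 1) {
            throw new IllegalArgumentException("maxIterations must be at least 1");
        }
        this.generator = generator;
        this.evaluator = evaluator;
        this.maxIterations = maxIterations;
    }

    Result loop(String task) {
        List<String> attempts = new ArrayList<>();
        String solution = "";
        String feedback = "";
        for (int i = 0; i < maxIterations; i++) {
            // Generator: the first attempt sees only the task; later attempts also see the feedback.
            String prompt = (i == 0) ? task
                    : task + "\nPrevious attempt:\n" + solution + "\nFeedback:\n" + feedback;
            solution = generator.apply(prompt);
            attempts.add(solution);

            // Evaluator: judge the attempt against the task.
            String evaluation = evaluator.apply("Task: " + task + "\nSolution: " + solution
                    + "\nReply PASS, or NEEDS_IMPROVEMENT: <feedback>.").strip();
            if (evaluation.startsWith("PASS")) {
                return new Result(solution, List.copyOf(attempts));
            }
            feedback = evaluation;
        }
        // Budget exhausted: return the latest attempt rather than loop forever.
        return new Result(solution, List.copyOf(attempts));
    }
}
```

The `maxIterations` cap is a deliberate design choice: without it, an evaluator that never answers `PASS` would keep the loop running indefinitely.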

Spring AI’s Implementation Advantages

Spring AI's implementation of these patterns offers several benefits that align with Anthropic's recommendations:

- Uniform interface across different LLM providers

- Built-in error handling and retries

- Flexible prompt management

Best Practices and Recommendations

Based on both Anthropic's research and Spring AI's implementations, here are key recommendations for building effective LLM-based systems:

Start Simple

- Begin with basic workflows before adding complexity

- Use the simplest pattern that meets your requirements

- Add sophistication only when needed

Design for Reliability

- Implement clear error handling

- Use type-safe responses where possible

- Build in validation at each step

Consider Trade-offs

- Balance latency vs. accuracy

- Evaluate when to use parallel processing

- Choose between fixed workflows and dynamic agents
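The "type-safe responses" and "validation at each step" recommendations can be illustrated without any framework: parse the model's raw text into a small record whose constructor rejects invalid values, so a bad reply fails at the step that produced it. The `Classification` record, its allowed categories, and the `category;confidence` reply format are all hypothetical. In a Spring AI application, structured output would normally do the parsing, and a validating constructor like this one could still guard the result.

```java
import java.util.Set;

/**
 * Hypothetical typed result of a classification step.
 * The compact constructor validates every instance, however it was created.
 */
record Classification(String category, double confidence) {

    static final Set<String> ALLOWED = Set.of("billing", "technical", "general");

    Classification {
        // Null check first: Set.of(...).contains(null) would throw NullPointerException.
        if (category == null || !ALLOWED.contains(category)) {
            throw new IllegalArgumentException("Unknown category: " + category);
        }
        // Written as a negated range check so NaN is rejected too.
        if (!(confidence >= 0.0 && confidence <= 1.0)) {
            throw new IllegalArgumentException("Confidence out of range: " + confidence);
        }
    }

    /** Parses a reply in the hypothetical "category;confidence" format, failing fast on malformed text. */
    static Classification parse(String raw) {
        String[] parts = raw.strip().split(";");
        if (parts.length != 2) {
            throw new IllegalArgumentException("Malformed model output: " + raw);
        }
        // Double.parseDouble throws NumberFormatException, a subclass of IllegalArgumentException.
        return new Classification(parts[0].strip(), Double.parseDouble(parts[1].strip()));
    }
}
```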

Future Work

In Part 2 of this series, we'll explore how to build more advanced agents that combine these foundational patterns with sophisticated features:

Pattern Composition

- Combining multiple patterns to create more powerful workflows

- Building hybrid systems that leverage the strengths of each pattern

- Creating flexible architectures that can adapt to changing requirements

Memory Management

- Implementing persistent memory across conversations

- Managing context windows efficiently

- Developing strategies for long-term knowledge retention

Tool Integration

- Leveraging external tools through standardized interfaces

- Implementing the Model Context Protocol (MCP) for enhanced model interactions

- Building extensible agent architectures

Tanzu Gen AI Solutions

VMware Tanzu Platform 10 Tanzu AI Server, powered by Spring AI, provides:

- Enterprise-Grade AI Deployment: Production-ready solution for deploying AI applications within your VMware Tanzu environment

- Simplified Model Access: Streamlined access to Amazon Bedrock Nova models through a unified interface

- Security and Governance: Enterprise-level security controls and governance features

- Scalable Infrastructure: Built on Spring AI, the integration supports scalable deployment of AI applications while maintaining high performance