Overview

Spring AI Playground is a self-hosted web UI that simplifies AI experimentation and testing. It provides Java developers with an intuitive interface for working with large language models (LLMs), vector databases, prompt engineering, and Model Context Protocol (MCP) integrations. Built on Spring AI, it supports leading model providers and includes comprehensive tools for testing retrieval-augmented generation (RAG) workflows and MCP integrations. The goal is to make AI more accessible to developers, helping them quickly prototype Spring AI-based applications with enhanced contextual awareness and external tool capabilities.

Version: Latest from GitHub

No API keys required when using Ollama - easy to get started!

Key Features

Chat Playground

Unified chat interface with dynamic RAG and MCP integration

VectorDB Playground

Full RAG flows: upload, chunk, embed, search with score details

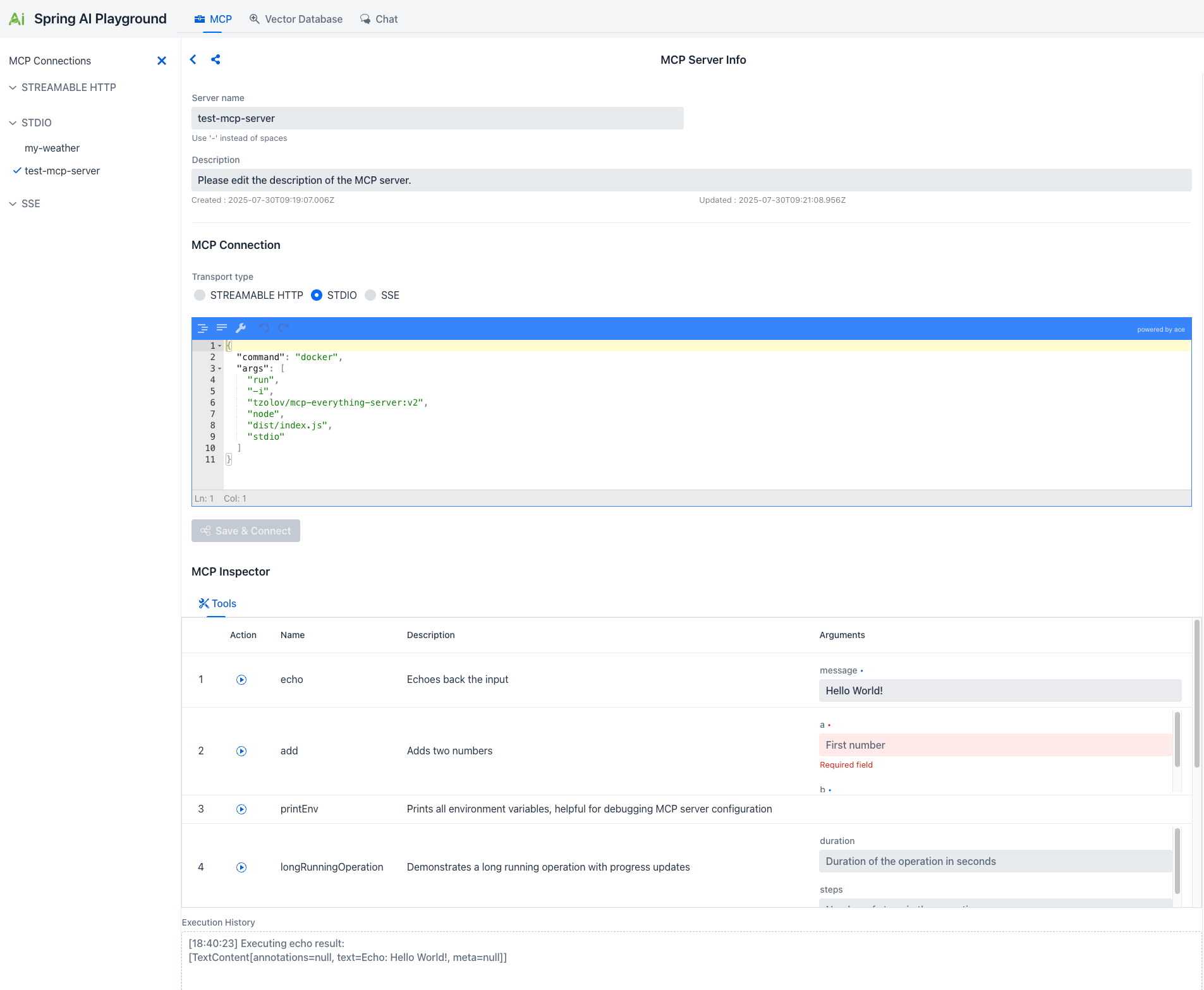

MCP Playground

Register, test, and invoke MCP tools interactively

PWA Support

Progressive Web App for desktop and mobile

Multi-Provider

Ollama, OpenAI, and OpenAI-compatible servers

Vaadin UI

Modern, responsive UI built with Vaadin Flow

Playgrounds

Chat Playground

A unified chat interface powered by Spring AI’s ChatClient that dynamically uses configurations from VectorDB and MCP playgrounds, enabling conversations enriched with retrieval and external tools.

VectorDB Playground

Supports full RAG flows with Spring AI’s RetrievalAugmentationAdvisor:

- Custom Chunk Input: Directly input and chunk custom text for embedding

- Document Uploads: Upload PDFs, Word documents, and PowerPoint presentations

- Search and Scoring: Vector similarity searches with similarity scores (0-1)

- Filter Expressions: Metadata-based filtering (e.g., author == 'John' && year >= 2023)
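The search, scoring, and filtering steps listed above can be sketched in plain Java. This is a minimal in-memory illustration, not Spring AI's VectorStore API: the class name SimilaritySearchSketch, the Chunk and Hit records, and the choice to map cosine similarity onto the 0-1 range via (cosine + 1) / 2 are assumptions made for the example, and real vector stores may compute scores differently.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

/** Hypothetical in-memory stand-in for a vector store (not a Spring AI class). */
final class SimilaritySearchSketch {

    /** A stored chunk: its text, its embedding, and its metadata. */
    record Chunk(String text, double[] embedding, Map<String, Object> metadata) {}

    /** A search hit paired with its similarity score. */
    record Hit(Chunk chunk, double score) {}

    /** Equivalent of the filter expression: author == 'John' && year >= 2023. */
    static final Predicate<Map<String, Object>> JOHN_SINCE_2023 =
            m -> "John".equals(m.get("author"))
                    && m.get("year") instanceof Integer year && year >= 2023;

    /** Cosine similarity rescaled from [-1, 1] to [0, 1] (an assumption of this sketch). */
    static double score(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Embedding dimensions differ");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            return 0.0; // similarity is undefined for a zero vector; treat as no match
        }
        return (dot / (Math.sqrt(normA) * Math.sqrt(normB)) + 1.0) / 2.0;
    }

    /** Applies the metadata filter, scores the survivors, and returns the best topK hits. */
    static List<Hit> search(List<Chunk> chunks, double[] query,
                            Predicate<Map<String, Object>> filter,
                            int topK, double threshold) {
        return chunks.stream()
                .filter(c -> filter.test(c.metadata()))
                .map(c -> new Hit(c, score(query, c.embedding())))
                .filter(h -> h.score() >= threshold)
                .sorted(Comparator.comparingDouble(Hit::score).reversed())
                .limit(topK)
                .toList();
    }

    public static void main(String[] unused) {
        List<Chunk> chunks = List.of(
                new Chunk("recent note by John", new double[] {1, 0},
                        Map.of("author", "John", "year", 2024)),
                new Chunk("old note by John", new double[] {1, 0},
                        Map.of("author", "John", "year", 2020)));
        for (Hit hit : search(chunks, new double[] {1, 0}, JOHN_SINCE_2023, 5, 0.0)) {
            System.out.println(hit.score() + " " + hit.chunk().text());
        }
    }
}
```

Filtering before scoring keeps excluded chunks out of the ranking entirely, which is one plausible reading of how a metadata filter narrows a similarity search.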

MCP Playground

Comprehensive Model Context Protocol integration:

- Connection Management: Configure MCP connections with STREAMABLE HTTP, STDIO, and SSE transports

- MCP Inspector: Explore available tools with detailed information on arguments and parameters

- Interactive Testing: Execute MCP tools directly with real-time results and execution history

STREAMABLE HTTP (MCP v2025-03-26) is a single-endpoint HTTP transport replacing HTTP+SSE. Clients send JSON-RPC via POST to /mcp with optional SSE-style streaming.
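As a rough illustration of the single-endpoint design, the sketch below builds a JSON-RPC request for the /mcp endpoint with the JDK's java.net.http API. The class name StreamableHttpSketch, the base URL, and the choice of the tools/list method are assumptions for the example. A real session would normally start with an initialize exchange, which is omitted here.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Hypothetical helper showing the shape of a Streamable HTTP request. */
final class StreamableHttpSketch {

    /** Builds a JSON-RPC 2.0 body asking the server to list its tools. */
    static String toolsListBody(int id) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id + ",\"method\":\"tools/list\"}";
    }

    /** Builds a POST to the single /mcp endpoint under the given base URL. */
    static HttpRequest buildRequest(String baseUrl, String jsonBody) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/mcp"))
                .header("Content-Type", "application/json")
                // The client signals that it accepts either a plain JSON reply or an SSE stream.
                .header("Accept", "application/json, text/event-stream")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }

    public static void main(String[] unused) {
        // The base URL is a placeholder; nothing is sent over the network here.
        HttpRequest request = buildRequest("http://localhost:8080", toolsListBody(1));
        System.out.println(request.method() + " " + request.uri());
    }
}
```

To actually send the request, it could be passed to HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()), provided an MCP server is listening at that address.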

Supported Technologies

AI Model Providers

- Ollama - Local LLM with tool-enabled models

- OpenAI - GPT-3.5, GPT-4, and more

- OpenAI-compatible servers - llama.cpp, TabbyAPI, LM Studio

- All Spring AI supported providers - Anthropic, Google, Amazon, Microsoft

Vector Databases

All Spring AI VectorStore implementations including:

- PostgreSQL/PGVector, ChromaDB, Milvus, Qdrant, Redis

- Pinecone, Weaviate, Elasticsearch, MongoDB Atlas

- Neo4j, OpenSearch, Oracle, Azure Cosmos DB

MCP Transports

- STREAMABLE HTTP - New single-endpoint transport (MCP v2025-03-26)

- STDIO - Direct process communication (requires running locally)

- SSE - Server-Sent Events transport

Quick Start

Prerequisites

- Java 21 or later

- Ollama running on your machine

- Docker (optional, but recommended)

Running with Docker (Recommended)

Linux Users:

host.docker.internal may not be available. Use --network="host" or replace with your host machine’s IP (e.g., 172.17.0.1).

Running Locally

PWA Installation

Spring AI Playground supports Progressive Web App installation for a native app-like experience:

- Open the application at http://localhost:8282

- Look for the browser’s PWA install prompt or the “Install PWA” button

- Follow the installation wizard

Chat Using RAG

- Set Up Vector Database: Upload documents through the VectorDB Playground

- Select Documents: Choose documents to use as the knowledge base

- Chat: The system retrieves relevant content and generates knowledge-grounded responses
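The final step, where retrieved content grounds the response, amounts to placing the retrieved chunks in the prompt ahead of the user's question. The sketch below shows one way that could look in plain Java. The class name RagPromptSketch and the prompt wording are hypothetical, and Spring AI's RetrievalAugmentationAdvisor applies its own template rather than this one.

```java
import java.util.List;

/** Hypothetical illustration of prompt augmentation with retrieved chunks. */
final class RagPromptSketch {

    /** Prepends numbered context chunks to the question; returns the question unchanged if none. */
    static String augment(String question, List<String> retrievedChunks) {
        if (retrievedChunks.isEmpty()) {
            return question;
        }
        StringBuilder prompt = new StringBuilder("Answer using only the context below.\n\nContext:\n");
        for (int i = 0; i < retrievedChunks.size(); i++) {
            prompt.append('[').append(i + 1).append("] ")
                  .append(retrievedChunks.get(i)).append('\n');
        }
        prompt.append("\nQuestion: ").append(question);
        return prompt.toString();
    }

    public static void main(String[] unused) {
        System.out.println(augment(
                "Which port does the app use?",
                List.of("The application is served at http://localhost:8282.")));
    }
}
```

Numbering the chunks makes it possible for a model to cite which passage it relied on, though whether it does so depends on the model and the instructions it is given.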

Chat Using MCP

- Configure MCP Servers: Set up connections in the MCP Playground

- Select Connections: Choose MCP connections in the Chat page

- Chat: The AI automatically uses available MCP tools based on context
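Conceptually, automatic tool use means the model names a tool and supplies arguments, and the application dispatches that request to whichever selected connection provides the tool. The plain-Java sketch below illustrates only that dispatch step. ToolDispatchSketch and its methods are hypothetical names and do not reflect the Spring AI or MCP SDK APIs.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical registry that routes a model's tool request to a handler by name. */
final class ToolDispatchSketch {

    private final Map<String, Function<Map<String, Object>, String>> tools = new LinkedHashMap<>();

    /** Makes a tool available under the given name, as selecting a connection would. */
    void register(String name, Function<Map<String, Object>, String> handler) {
        tools.put(name, handler);
    }

    /** Invokes the named tool, or reports an error string the model can react to. */
    String call(String name, Map<String, Object> arguments) {
        Function<Map<String, Object>, String> handler = tools.get(name);
        if (handler == null) {
            return "error: unknown tool '" + name + "'";
        }
        return handler.apply(arguments);
    }

    public static void main(String[] unused) {
        ToolDispatchSketch registry = new ToolDispatchSketch();
        registry.register("echo", input -> "echo: " + input.get("text"));
        System.out.println(registry.call("echo", Map.of("text", "hello")));
    }
}
```

Returning an error string instead of throwing lets the failure be fed back into the conversation, which is a design choice of this sketch and not a documented behavior of the playground.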

Upcoming Features

1. Spring AI Agent: Build production-ready agents combining MCP, RAG, and Chat in unified workflows

2. Observability: Tools to track and monitor AI performance, usage, and errors

3. Authentication: Login and security features to control access

4. Multimodal Support: Embedding, image, audio, and moderation models

Resources

GitHub Repository

View source code and contribute

Spring AI Documentation

Spring AI reference documentation