The Tool Search Tool pattern, pioneered by Anthropic, addresses this: instead of loading all tool definitions upfront, the model discovers tools on demand. It initially receives only a search tool, queries for capabilities when needed, and gets the relevant tool definitions expanded into context. This achieves significant token savings while maintaining access to hundreds of tools.

The key insight: While Anthropic introduced this pattern for Claude, we can implement the same approach for any LLM using Spring AI’s Recursive Advisors.

Spring AI provides a portable abstraction that makes dynamic tool discovery work across OpenAI, Anthropic, Gemini, Ollama, Azure OpenAI, and any other LLM provider supported by Spring AI.

Our preliminary benchmarks show Spring AI’s Tool Search Tool implementation achieves 34-64% token reduction across OpenAI, Anthropic, and Gemini models while maintaining full access to hundreds of tools.

The Spring AI Tool Search Tool project is available on GitHub: spring-ai-tool-search-tool.

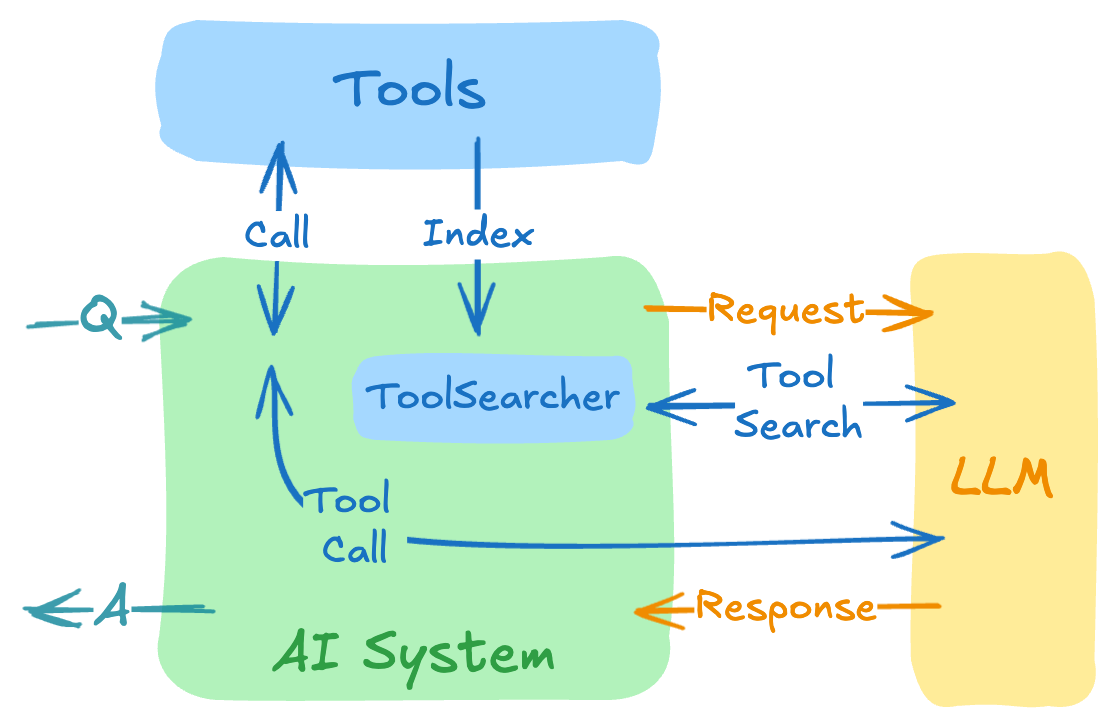

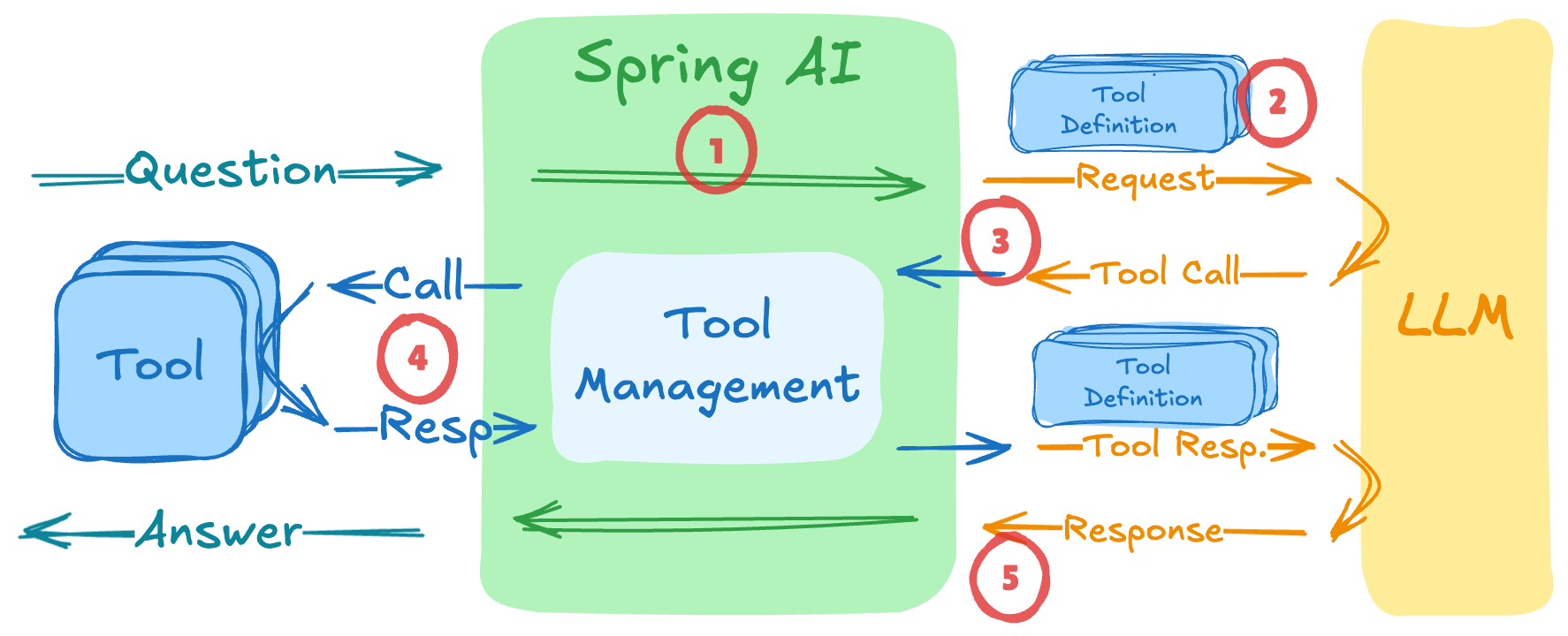

How Tool Calling Works

First, let’s understand how Spring AI’s tool calling works when using the ToolCallAdvisor - a special recursive advisor that:

- Intercepts the ChatClient request before it reaches the LLM

- Includes tool definitions in the prompt sent to the model - for all registered tools!

- Detects tool call requests in the model’s response

- Executes the requested tools using the ToolCallingManager

- Loops back with tool results until the model provides a final answer
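
The loop described above can be sketched in plain Java. This is only an illustration of the control flow, not Spring AI code: `ToolCallLoopSketch`, `Reply`, `Model`, and `run` are hypothetical names, and the "model" is a scripted stand-in rather than a real LLM.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Plain-Java sketch of a recursive tool calling loop.
// All names are hypothetical; none of these types are Spring AI classes.
class ToolCallLoopSketch {

    // A model reply is either a request to call a tool or a final answer.
    record Reply(String toolName, String toolArg, String finalAnswer) {
        boolean isToolCall() { return toolName != null; }
    }

    // Stand-in for the LLM: sees the conversation so far and the tool names.
    interface Model {
        Reply chat(List<String> messages, List<String> toolNames);
    }

    static String run(Model model, Map<String, Function<String, String>> tools, String userText) {
        List<String> messages = new ArrayList<>(List.of("user: " + userText));
        // Every registered tool is sent with every request.
        List<String> toolNames = List.copyOf(tools.keySet());
        while (true) {
            Reply reply = model.chat(messages, toolNames);
            if (!reply.isToolCall()) {
                return reply.finalAnswer(); // no tool requested: the loop ends
            }
            // Execute the requested tool and loop back with its result.
            String result = tools.get(reply.toolName()).apply(reply.toolArg());
            messages.add("tool " + reply.toolName() + ": " + result);
        }
    }

    public static void main(String[] args) {
        Map<String, Function<String, String>> tools = Map.of("weather", city -> "Sunny");
        // Scripted model: asks for the weather once, then answers.
        Model scripted = (messages, toolNames) -> messages.size() == 1
                ? new Reply("weather", "Amsterdam", null)
                : new Reply(null, null, "Answer based on " + messages.get(1));
        // prints "Answer based on tool weather: Sunny"
        System.out.println(run(scripted, tools, "What should I wear in Amsterdam?"));
    }
}
```

Note that `toolNames` is computed once from the full registry, so its size grows with the number of registered tools regardless of which ones the model ends up calling.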

The Problem

The standard tool calling flow (such as ToolCallAdvisor) sends all tool definitions to the LLM upfront. This creates three major issues with large tool collections:

- Context bloat - Massive token consumption before any conversation begins

- Tool confusion - Models struggle to choose correctly when facing 30+ similar tools

- Higher costs - Paying for unused tool definitions in every request

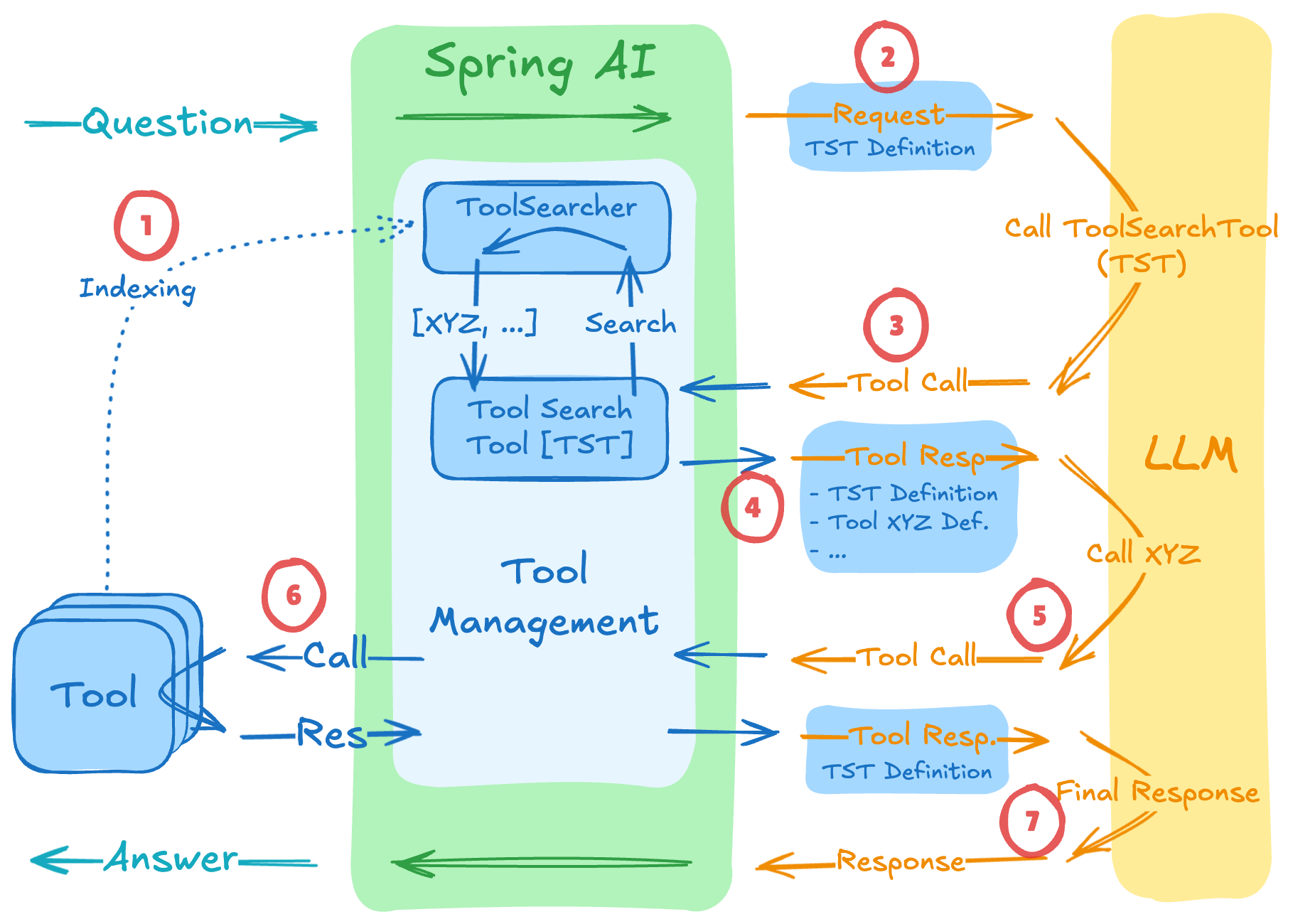

The Tool Search Tool Solution

By extending Spring AI’s ToolCallAdvisor, we’ve created a ToolSearchToolCallAdvisor that implements dynamic tool discovery. It intercepts the tool calling loop to selectively inject tools based on what the model discovers it needs.

The flow works as follows:

- Indexing: At conversation start, all registered tools are indexed in the ToolSearcher (but NOT sent to the LLM)

- Initial Request: Only the Tool Search Tool (TST) definition is sent to the LLM, saving context

- Discovery Call: When the LLM needs capabilities, it calls the TST with a search query

- Search & Expand: The ToolSearcher finds matching tools (e.g., “Tool XYZ”) and their definitions are added to the next request

- Tool Invocation: The LLM now sees both TST and the discovered tool definitions, and can call the actual tool

- Tool Execution: The discovered tool is executed and results returned to the LLM

- Response: The LLM generates the final answer using the tool results
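
The steps above can be sketched as a small plain-Java simulation. It is a rough illustration under stated assumptions, not the project's implementation: `ToolSearchFlowSketch`, `Call`, `Model`, and the naive keyword `search` method are hypothetical, and the model is scripted rather than a real LLM.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Plain-Java sketch of the discovery loop.
// Hypothetical names; these are not the project's classes.
class ToolSearchFlowSketch {

    static final String SEARCH_TOOL = "toolSearchTool";

    record Call(String tool, String arg) {}

    // Stand-in for the LLM: given the tools it can currently see, returns the
    // next tool call, or null when it is ready to produce the final answer.
    interface Model {
        Call next(Set<String> visibleTools, List<String> history);
    }

    // Naive keyword lookup over an index of tool name -> description.
    static List<String> search(Map<String, String> index, String query) {
        List<String> hits = new ArrayList<>();
        for (Map.Entry<String, String> e : index.entrySet()) {
            for (String word : query.toLowerCase().split("\\s+")) {
                if (e.getValue().toLowerCase().contains(word)) {
                    hits.add(e.getKey());
                    break;
                }
            }
        }
        return hits;
    }

    // Returns the tool definitions visible to the model when it finishes.
    static Set<String> run(Model model, Map<String, String> index) {
        // Initial request: only the search tool is exposed.
        Set<String> visible = new LinkedHashSet<>(List.of(SEARCH_TOOL));
        List<String> history = new ArrayList<>();
        Call call;
        while ((call = model.next(visible, history)) != null) {
            if (call.tool().equals(SEARCH_TOOL)) {
                List<String> found = search(index, call.arg()); // discovery call
                visible.addAll(found);                          // expand the next request
                history.add(SEARCH_TOOL + " -> " + found);
            } else if (visible.contains(call.tool())) {
                history.add(call.tool() + "(" + call.arg() + ") executed"); // tool invocation
            } else {
                history.add(call.tool() + " is not available"); // not discovered yet
            }
        }
        return visible;
    }

    public static void main(String[] args) {
        Map<String, String> index = Map.of(
                "weather", "Get the weather forecast for a location",
                "currentTime", "Get the current time and date",
                "sendEmail", "Send an email message");
        // Scripted model: search for a weather tool, call it, then stop.
        Model scripted = (visible, history) -> switch (history.size()) {
            case 0 -> new Call(SEARCH_TOOL, "weather");
            case 1 -> new Call("weather", "Amsterdam");
            default -> null;
        };
        // prints "[toolSearchTool, weather]"
        System.out.println(run(scripted, index));
    }
}
```

In this sketch the unrelated `sendEmail` and `currentTime` entries stay in the index and never become visible to the model, which is where the context savings come from.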

Pluggable Search Strategies

The ToolSearcher interface abstracts the search implementation, supporting multiple strategies (see tool-searchers for implementations):

| Strategy | Implementation | Best For |
|---|---|---|
| Semantic | VectorToolSearcher | Natural language queries, fuzzy matching |
| Keyword | LuceneToolSearcher | Exact term matching, known tool names |
| Regex | RegexToolSearcher | Tool name patterns (get_*_data) |
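
To make the idea of pluggable strategies concrete, here is a minimal sketch with two interchangeable searchers behind one interface. `SimpleToolSearcher` is a hypothetical, simplified stand-in; the project's actual ToolSearcher interface and its Lucene and vector implementations may look quite different.

```java
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Hypothetical, simplified stand-in for a pluggable searcher.
// The project's actual ToolSearcher interface may differ.
class SearchStrategySketch {

    interface SimpleToolSearcher {
        List<String> search(String query);
    }

    // Regex strategy: the query is a pattern matched against whole tool names.
    static SimpleToolSearcher regex(Map<String, String> tools) {
        return query -> {
            Pattern pattern = Pattern.compile(query);
            return tools.keySet().stream()
                    .filter(name -> pattern.matcher(name).matches())
                    .sorted()
                    .toList();
        };
    }

    // Keyword strategy: case-insensitive substring match on tool descriptions.
    static SimpleToolSearcher keyword(Map<String, String> tools) {
        return query -> tools.entrySet().stream()
                .filter(e -> e.getValue().toLowerCase().contains(query.toLowerCase()))
                .map(Map.Entry::getKey)
                .sorted()
                .toList();
    }

    public static void main(String[] args) {
        // Tool name -> description (illustrative entries only).
        Map<String, String> tools = Map.of(
                "get_weather_data", "Weather forecast for a city",
                "get_stock_data", "Stock quotes",
                "send_email", "Send an email");
        // prints "[get_stock_data, get_weather_data]"
        System.out.println(regex(tools).search("get_.*_data"));
        // prints "[get_weather_data]"
        System.out.println(keyword(tools).search("weather"));
    }
}
```

Because both strategies share one interface, the calling code does not need to change when one is swapped for the other.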

Getting Started

The project’s GitHub repository is: spring-ai-tool-search-tool. For detailed setup instructions and code examples, see the Quick Start guide (v1.x) and the related example application (v1.x). The library is available on Maven Central (version 1.0.1).

The following walkthrough traces a single request through the advisor:

- User Request: “Help me plan what to wear today in Amsterdam. Please suggest clothing shops that are open right now.”

- Initialization: Index all tools: weather, clothing, currentTime (+ potentially 100s more)

- First LLM Call - LLM sees only toolSearchTool

  - LLM calls toolSearchTool(query="current time date") → ["currentTime"]

- Second LLM Call - LLM sees toolSearchTool + currentTime

  - LLM calls currentTime("Amsterdam") → "2025-12-08T11:30"

  - LLM calls toolSearchTool(query="weather location") → ["weather"]

- Third LLM Call - LLM sees toolSearchTool + currentTime + weather

  - LLM calls weather("Amsterdam") → "Sunny, 15°C"

  - LLM calls toolSearchTool(query="clothing shops") → ["clothing"]

- Fourth LLM Call - LLM sees toolSearchTool + currentTime + weather + clothing

  - LLM calls clothing("Amsterdam", "2025-12-08T11:30") → ["H&M", "Zara", "Uniqlo"]

- Final Response: “Based on the sunny 15°C weather in Amsterdam, I recommend light layers. Here are clothing shops open now: H&M, Zara, …”

Performance Measurements

⚠️ Disclaimer: These are preliminary, manual measurements taken after a few runs. They are not averaged across multiple iterations and should be considered illustrative rather than representative.

To quantify the token savings, we ran preliminary benchmarks using the demo application with the following setup:

- Task: “Help me plan what to wear today in Amsterdam. Please suggest clothing shops that are open right now.”

- 28 total tools: 3 relevant tools (weather, clothing, currentTime) + 25 unrelated “dummy” tools, deliberately not relevant to the weather/clothing task, demonstrating how the tool search efficiently discovers only the needed tools among many unrelated options.

- Search strategies: Lucene (keyword-based) and VectorStore (semantic)

- Models tested: Gemini (gemini-3-pro-preview), OpenAI (gpt-5-mini-2025-08-07), Anthropic (claude-sonnet-4-5-20250929)

Results with Lucene Search

| Model | Approach | Total Tokens | Prompt Tokens | Completion Tokens | Requests | Savings |
|---|---|---|---|---|---|---|
| Gemini | With TST | 2,165 | 1,412 | 231 | 4 | 60% |
| Gemini | Without TST | 5,375 | 4,800 | 176 | 3 | — |
| OpenAI | With TST | 4,706 | 2,770 | 1,936 | 5 | 34% |
| OpenAI | Without TST | 7,175 | 5,765 | 1,410 | 3 | — |
| Anthropic | With TST | 6,273 | 5,638 | 635 | 5 | 64% |
| Anthropic | Without TST | 17,342 | 16,752 | 590 | 4 | — |

Results with VectorStore Search

| Model | Approach | Total Tokens | Prompt Tokens | Completion Tokens | Requests | Savings |
|---|---|---|---|---|---|---|
| Gemini | With TST | 2,214 | 1,502 | 234 | 4 | 57% |
| Gemini | Without TST | 5,122 | 4,767 | 73 | 3 | — |
| OpenAI | With TST | 3,697 | 2,109 | 1,588 | 4 | 47% |
| OpenAI | Without TST | 6,959 | 5,771 | 1,188 | 3 | — |
| Anthropic | With TST | 6,319 | 5,642 | 677 | 5 | 63% |
| Anthropic | Without TST | 17,291 | 16,744 | 547 | 4 | — |
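
The Savings column in both tables is consistent with the relative reduction in total tokens, rounded to the nearest percent. This reading is inferred from the reported numbers rather than stated explicitly; the Gemini and Anthropic rows of the Lucene table serve as worked examples:

```latex
\text{savings} = 1 - \frac{T_{\text{with TST}}}{T_{\text{without TST}}}

\text{Gemini (Lucene):}\quad 1 - \frac{2165}{5375} \approx 0.597 \approx 60\%

\text{Anthropic (Lucene):}\quad 1 - \frac{6273}{17342} \approx 0.638 \approx 64\%
```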

Key Observations

- Significant token savings across all models: The Tool Search Tool pattern achieved 34-64% reduction in total token consumption depending on the model and search strategy.

- Prompt tokens are the key driver: The savings come primarily from reduced prompt tokens - with TST, only discovered tool definitions are included rather than all 28 tools upfront.

- Trade-off: More requests, fewer tokens: TST requires 4-5 requests vs 3-4 without, but the total token cost is significantly lower.

- Both search strategies perform similarly: Lucene and VectorStore produced comparable results, with VectorStore showing better efficiency for OpenAI in this test (47% vs 34% savings).

- All models successfully completed the task: All three models (Gemini, OpenAI, Anthropic) figured out that they needed to call currentTime before invoking the other tools, demonstrating correct reasoning about tool dependencies.

- Different tool discovery strategies: Models exhibited varying approaches: some managed to request all necessary tools upfront, while others discovered them one by one. However, all models leveraged parallel tool calling when possible to optimize execution.

- Older models may struggle: Older model versions may have difficulty with the tool search pattern, potentially missing required tools or making suboptimal discovery decisions. Consider adding a custom systemMessageSuffix to provide additional guidance to the model, experimenting with different tool-searcher configurations, or pairing this approach with the LLM-as-a-Judge pattern to ensure reliable and consistent behavior across different models.

When to Use

| Tool Search Tool Approach | Traditional Approach |
|---|---|
| 20+ tools in your system | Small tool library (<20 tools) |
| Tool definitions consuming >5K tokens | All tools frequently used in every session |
| Building MCP-powered systems with multiple servers | Very compact tool definitions |
| Experiencing tool selection accuracy issues | |

Next Steps

As the Tool Search Tool project matures and proves its value within the Spring AI Community, we may consider adding it to the core Spring AI project. For deterministic tool selection without LLM involvement, explore the Pre-Select Tool Demo and the experimental PreSelectToolCallAdvisor.

Unlike the agentic ToolSearchToolCallAdvisor, this advisor pre-selects tools based on message content before the LLM call—ideal for Chain of Thought patterns where a preliminary reasoning step explicitly names the required tools.

Also, you can consider combining the Tool Search Tool with LLM-as-a-Judge patterns to ensure discovered tools actually fulfill the user’s task. A judge model could evaluate whether the dynamically selected tools produced satisfactory results and improve the tool discovery if needed.

Try the current implementation and provide feedback to help shape its evolution into a first-class Spring AI feature.

Conclusion

The Tool Search Tool pattern is a step toward scalable AI agents. By combining Anthropic’s innovative approach with Spring AI’s portable abstraction, we can build systems that efficiently manage thousands of tools while maintaining high accuracy across any LLM provider. The power of Spring AI’s recursive advisor architecture is that it allows us to implement sophisticated tool discovery workflows that work universally - whether you’re using OpenAI’s GPT models, Anthropic’s Claude, local Ollama models, or any other LLM supported by Spring AI. You get the same dynamic tool discovery benefits without being locked into a specific provider’s native implementation.

References

- Anthropic Tool Search Tool Pattern: Advanced Tool Use

- Spring AI Tool Search Tool Implementation: GitHub Repository

- Spring AI Tools Documentation: Tools API Reference

- Spring AI Recursive Advisors: Advisors API Reference

- Spring AI Recursive Advisors Blog: Spring AI Recursive Advisors